During its annual Connect 2024 conference, Meta announced significant enhancements to its AI offerings, including upgraded features for its Llama model and innovative tools for social media engagement.

Meta, the tech giant known for its expansive social media platforms, unveiled a slew of new AI tools during its annual Meta Connect 2024 conference. The event, held in September, was marked by a series of announcements aimed at bolstering the company’s position in the increasingly competitive artificial intelligence arena.

Headlining the announcements were enhancements to Meta AI, the company’s assistant built on its Llama models, which CEO Mark Zuckerberg claimed is on track to become the most widely used AI assistant by the end of the year. Critics counter that Meta’s high user numbers stem largely from embedding the assistant across all Meta-owned apps rather than from people deliberately seeking it out, which suggests weaker genuine engagement than dedicated AI applications such as ChatGPT or Google’s Gemini enjoy.

One of the key updates expands the assistant’s capabilities across Meta’s popular platforms: Messenger, WhatsApp, and Instagram. The AI can now edit photos and respond to voice commands. In an unusual twist, Meta has added celebrity voices to the assistant, allowing users to hear responses from figures such as John Cena, Judi Dench, and Kristen Bell. The move seems aimed at broadening the assistant’s appeal to a wider, perhaps less tech-savvy, audience.

Meta also moved into video with new automatic dubbing for short-form vertical videos, initially supporting English and Spanish. The tool translates a creator’s speech and adjusts the video so that lip movements match the translated audio, with the stated aim of preserving the speaker’s own voice and accent. Early demonstrations showed that the feature still needs refinement, however, with translations often sounding mechanical in longer sentences.

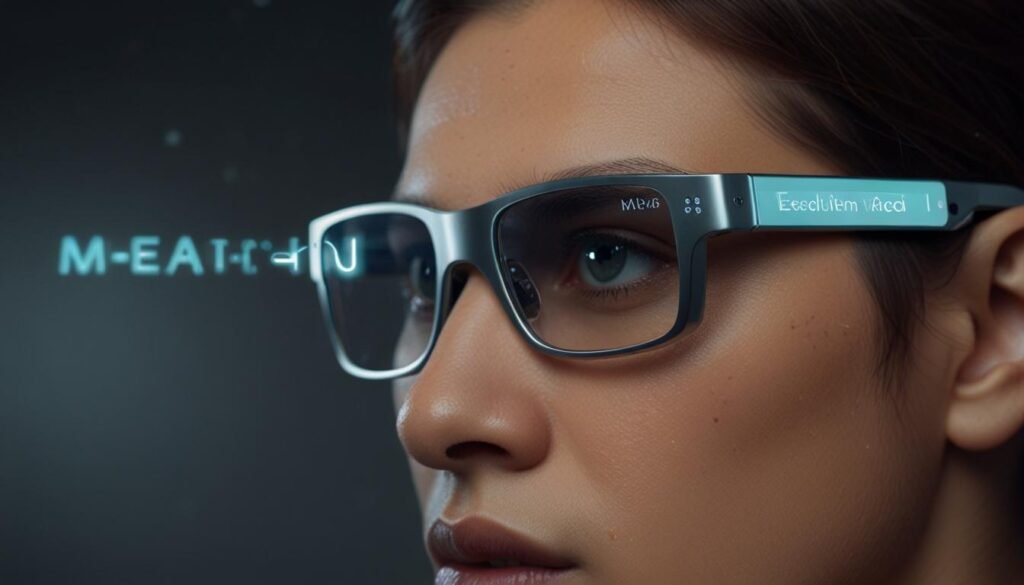

In line with Meta’s vision of further integrating AI into daily life, the company announced developments for its smart glasses line. The glasses could now offer live interaction with video and photo content, with possible applications ranging from real-time exercise feedback to on-the-spot sports performance coaching.

Additionally, the smart glasses aim to enhance communication with real-time language translation. With initial support for French, Spanish, and Italian, they could allow seamless conversations through immediate spoken translations. Given how new the technology is, however, it remains uncertain how well it will perform under the rigours of day-to-day use.

Meta also bolstered its broader customer service AI offerings with tools tailored for influencers. These allow AI-driven interaction with fans, including video calls that use AI-generated personas of popular figures. While these innovations may streamline influencer-audience interactions, they could further blur the line between personal and commodified communication.

Meta Connect 2024 painted a picture of a future in which AI is deeply intertwined with both communication and content creation. The innovations, while promising, underscore a perennial challenge for the industry: making AI reliable and usable enough to meet the expectations Silicon Valley sets.

Source: Noah Wire Services